Bio: I am currently a Postdoctoral Scientist at AWS Agentic AI and an incoming Assistant Professor in the Department of Computer Science at University of California, Los Angeles (UCLA).

I completed my Ph.D. in Computer Science at Columbia University, advised by Prof. Baishakhi Ray and Prof. Gail Kaiser. Previously, I also had wonderful experiences at Google DeepMind, Amazon AWS AI Labs, and IBM Research.

Research: My research focuses on developing large language models (LLMs) and agentic systems for software engineering (SE). Most recently, I am interested in training LLMs with advanced symbolic reasoning capabilities (e.g., debugging, testing, program analysis, verification) and building efficient, collaborative agentic systems for complex software development and maintenance tasks.

For an overview of the research that I have done, this video might help.

🎯 I am actively looking for students to join my research group @ UCLA CS. Solid coding skills and experience in large language models (pre-/post-training or agentic systems), program analysis and verification, or security are strongly preferred.

🤝 If you are interested in working with me, drop me an email with (1) your CV and (2) a brief introduction of your research interests and background.


News

📣 May 2025: I will join the Department of Computer Science @ UCLA as an Assistant Professor!

⭐ Sep. 2024: "SemCoder: Training Code Language Models with Comprehensive Semantics Reasoning" got accepted by NeurIPS 2024.

🎉 July 2024: "Vulnerability Detection with Code Language Models: How Far Are We?" got accepted by ICSE 2025.

May 2024: I joined Google DeepMind as a Student Researcher, working on Code LLMs.


Co-PatcheR: Collaborative Software Patching with Component(s)-specific Small Reasoning Models

Yuheng Tang, Hongwei Li, Kaijie Zhu, Michael Yang, Yangruibo Ding, Wenbo Guo

NeurIPS 2025


SemCoder: Training Code Language Models with Comprehensive Semantics Reasoning

Yangruibo Ding, Jinjun Peng, Marcus J. Min, Gail Kaiser, Junfeng Yang, Baishakhi Ray

NeurIPS 2024


Vulnerability Detection with Code Language Models: How Far Are We?

Yangruibo Ding, Yanjun Fu, Omniyyah Ibrahim, Chawin Sitawarin, Xinyun Chen, Basel Alomair, David Wagner, Baishakhi Ray, Yizheng Chen

ICSE 2025


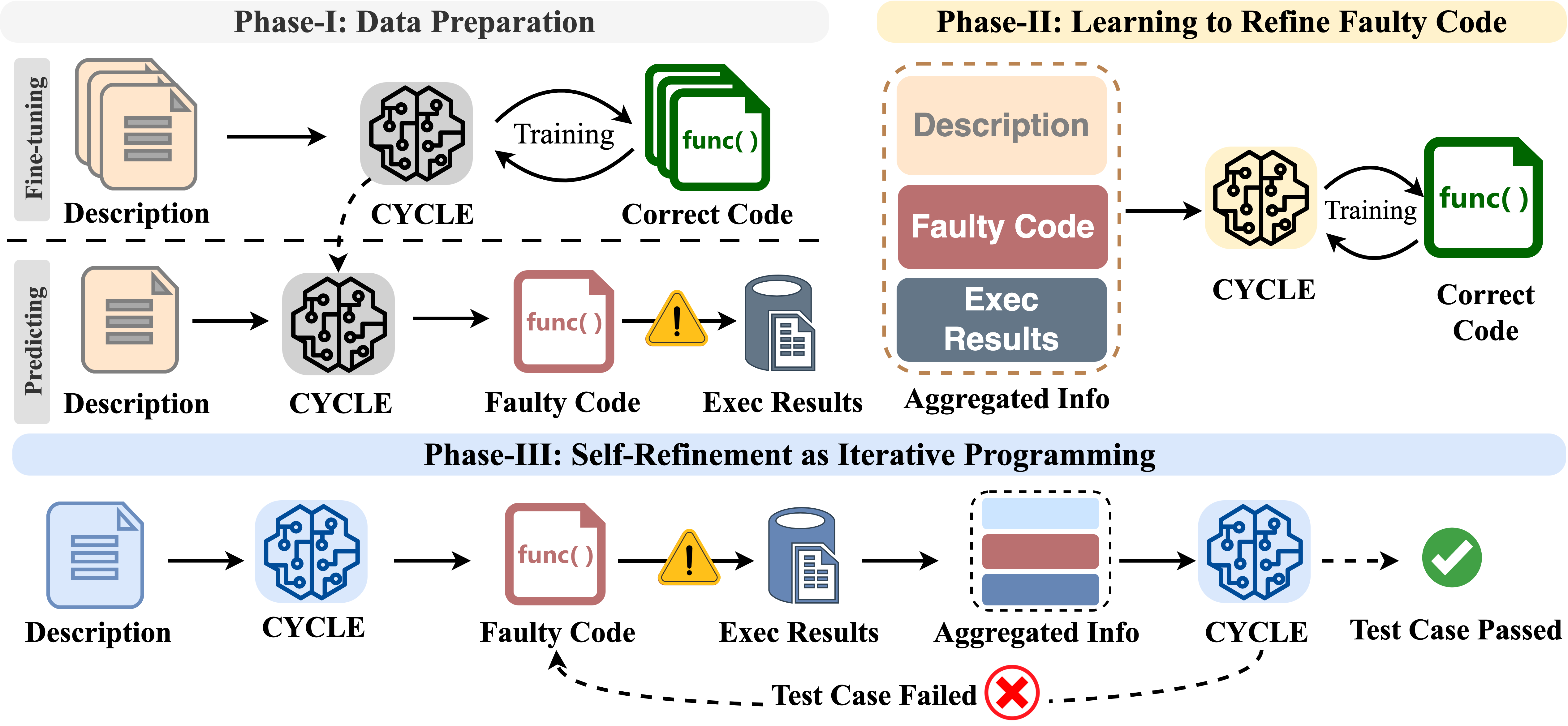

CYCLE: Learning to Self-Refine Code Generation

Yangruibo Ding, Marcus J. Min, Gail Kaiser, Baishakhi Ray

OOPSLA 2024


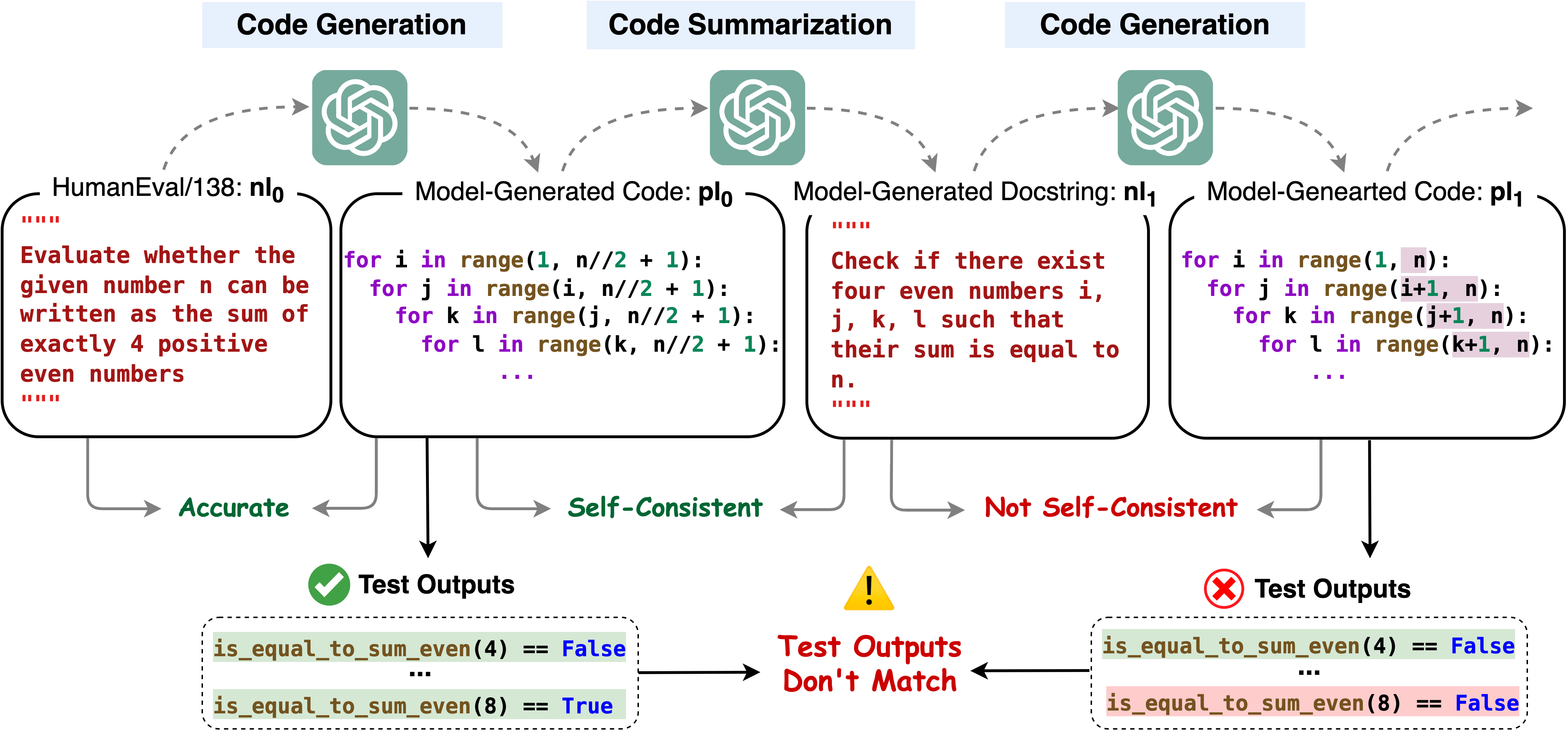

Beyond Accuracy: Evaluating Self-Consistency of Code Large Language Models with IdentityChain

Marcus J. Min, Yangruibo Ding, Luca Buratti, Saurabh Pujar, Gail Kaiser, Suman Jana, Baishakhi Ray

ICLR 2024


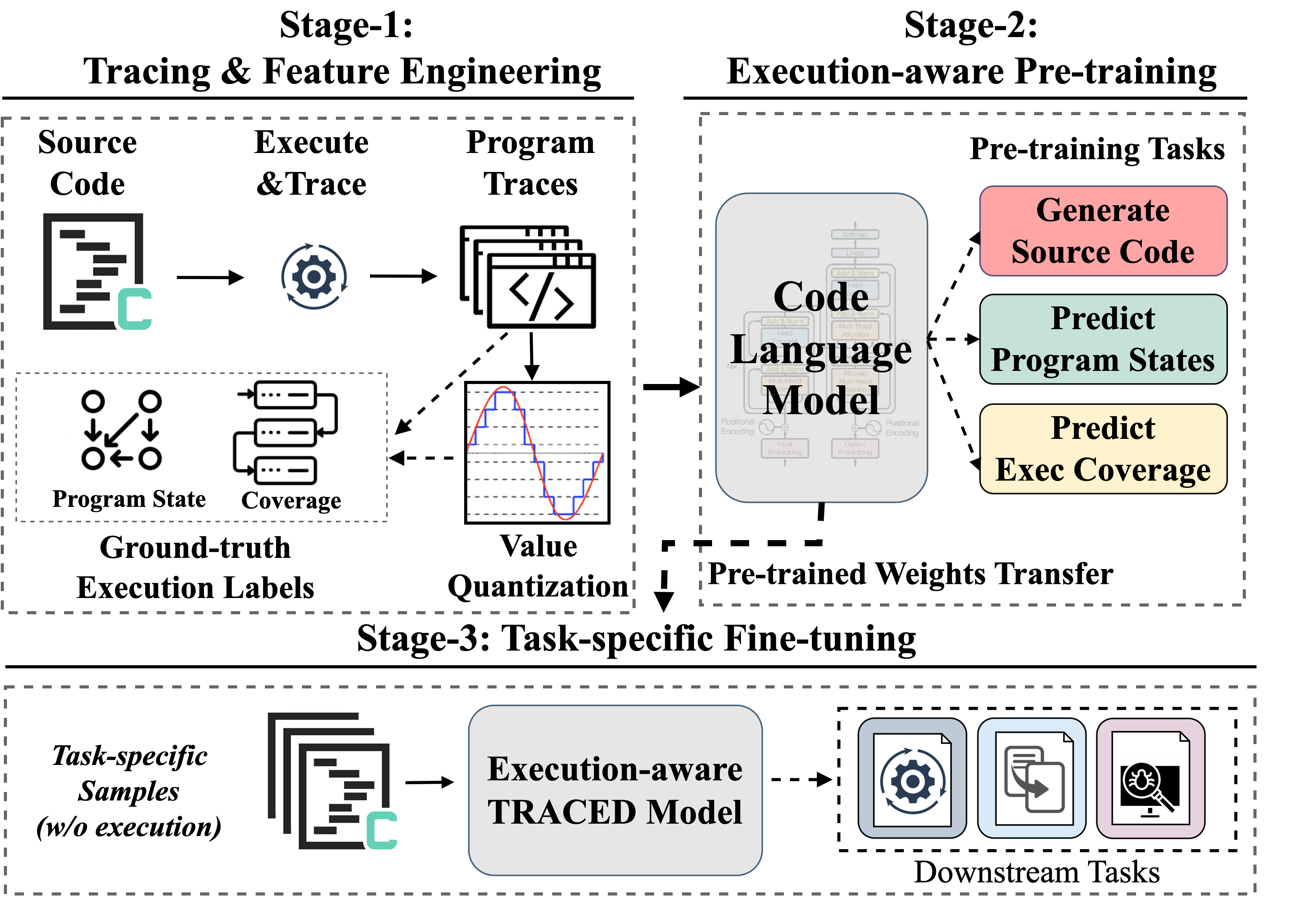

TRACED: Execution-aware Pre-training for Source Code

Yangruibo Ding, Ben Steenhoek, Kexin Pei, Gail Kaiser, Wei Le, Baishakhi Ray

ICSE 2024


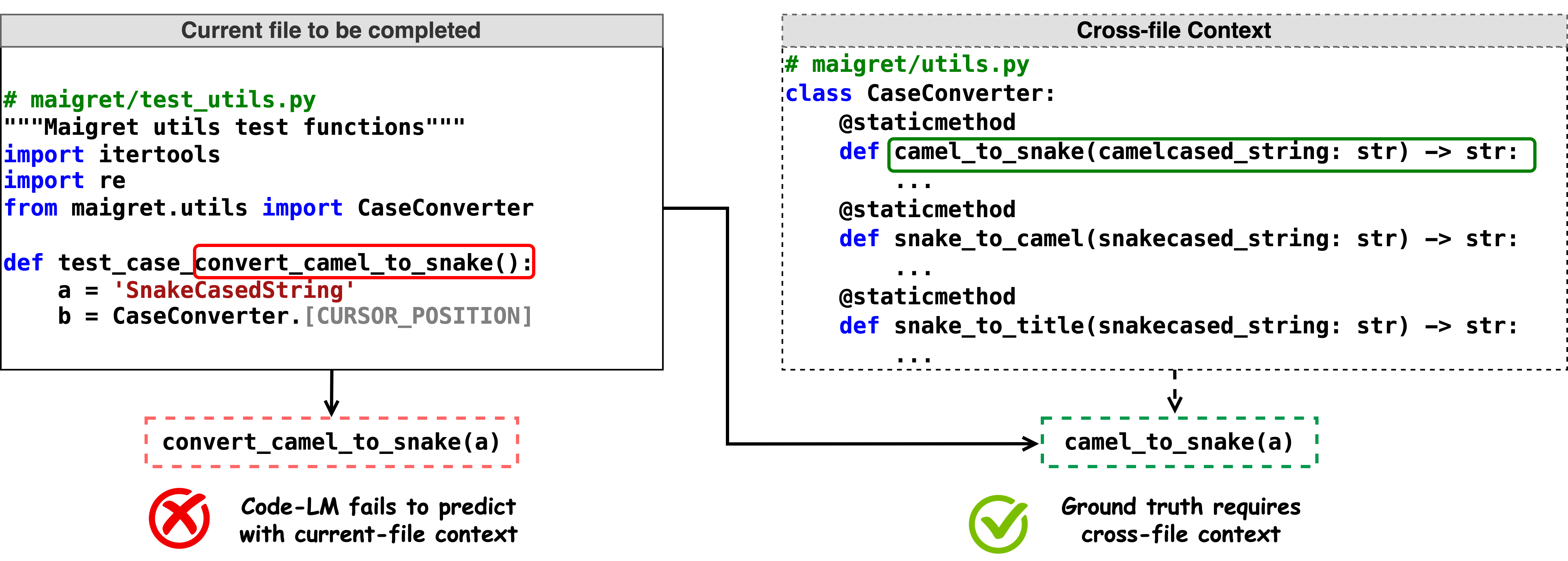

CoCoMIC: Code Completion By Jointly Modeling In-file and Cross-file Context

Yangruibo Ding*, Zijian Wang*, Wasi Uddin Ahmad*, Murali Krishna Ramanathan, Ramesh Nallapati, Parminder Bhatia, Dan Roth, Bing Xiang (* equal contribution)

LREC-COLING 2024


CrossCodeEval: A Diverse and Multilingual Benchmark for Cross-File Code Completion

Yangruibo Ding*, Zijian Wang*, Wasi Uddin Ahmad*, Hantian Ding, Ming Tan, Nihal Jain, Murali Krishna Ramanathan, Ramesh Nallapati, Parminder Bhatia, Dan Roth, Bing Xiang (* equal contribution)

NeurIPS 2023 (Datasets & Benchmarks)


Recent Talks

- Feb. - Apr. 2025: "From Code Generation Towards Software Engineering: Advancing Code Intelligence w/ Language Models" @ UW, UMD, CMU, UCLA, UTD, JHU, Georgia Tech, Stony Brook, Dartmouth, NUS.
- Oct. 2024: "Training Code Language Models with Comprehensive Semantics Reasoning" @ UIUC.
- Oct. 2024: "Semantic-aware Source Code Modeling" @ UMD, NCSU, ASE'24.
- Aug. 2024: "Training Code Language Models with Comprehensive Semantics Reasoning" @ Google DeepMind.
- Apr. 2024: "Vulnerability Detection with Code Language Models: How Far Are We?" @ Columbia SSS Seminar.


Services

Program Committee

Conference Reviewer

Journal Reviewer


Last Updated: July 2025.

Photo by Lingyi. Website template by Jon Barron.
